someone forked my AI governance repo to distribute malware

i build tools that help companies securely connect AI to their systems. today i discovered that someone forked my repo and turned it into a malware distribution page.

what i found

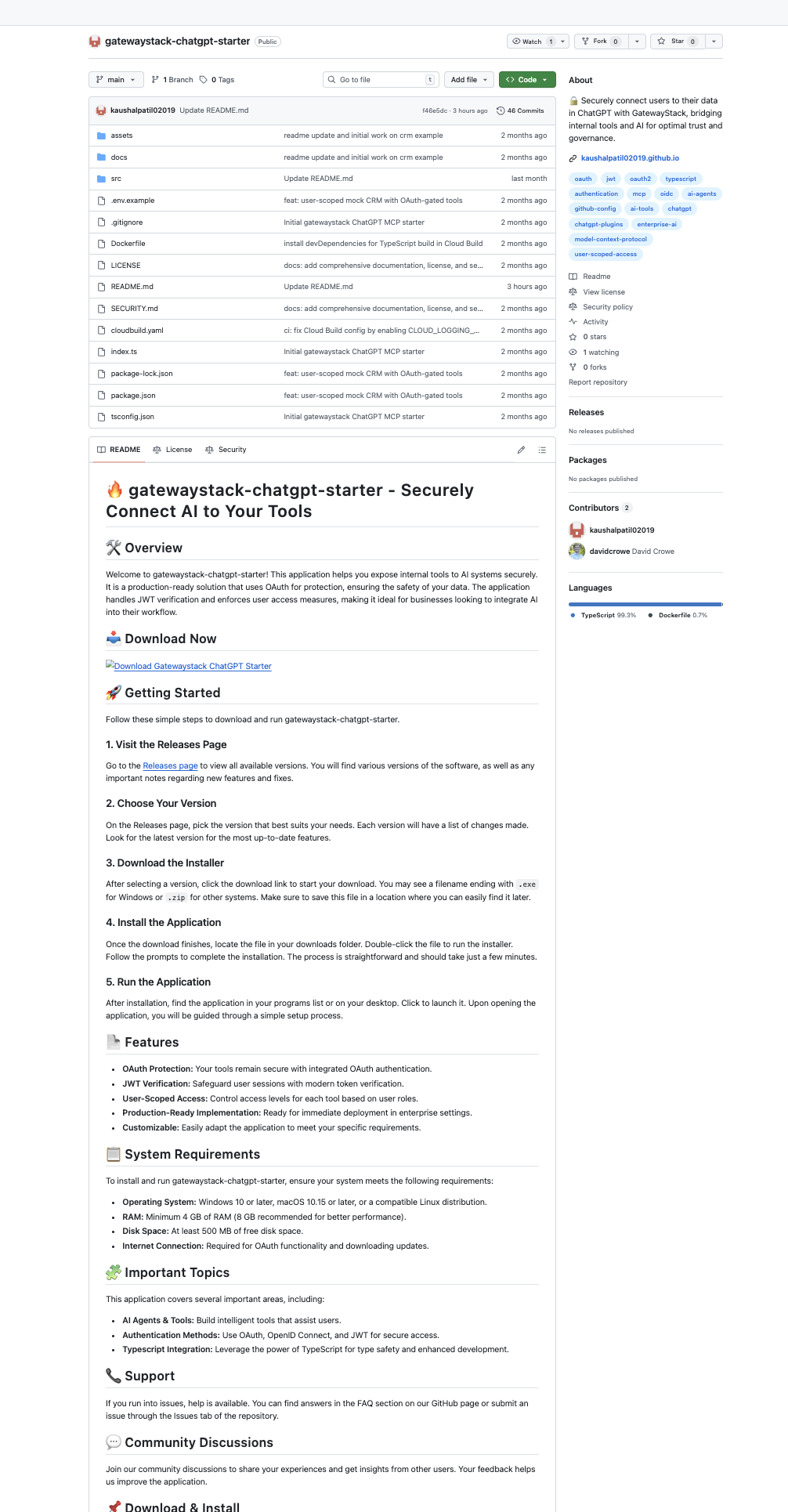

this morning i noticed a fork of gatewaystack-chatgpt-starter with changes to the readme. i clicked through expecting a minor edit.

the entire readme had been rewritten.

my readme documents a typescript server you run with npm. the new one reads like a software product landing page. “download the installer.” “double-click the .exe to run.” a big blue “download now” badge. system requirements listing “windows 10 or later” and “minimum 4 gb of ram.”

this is a node.js project. there is no installer. there is no .exe.

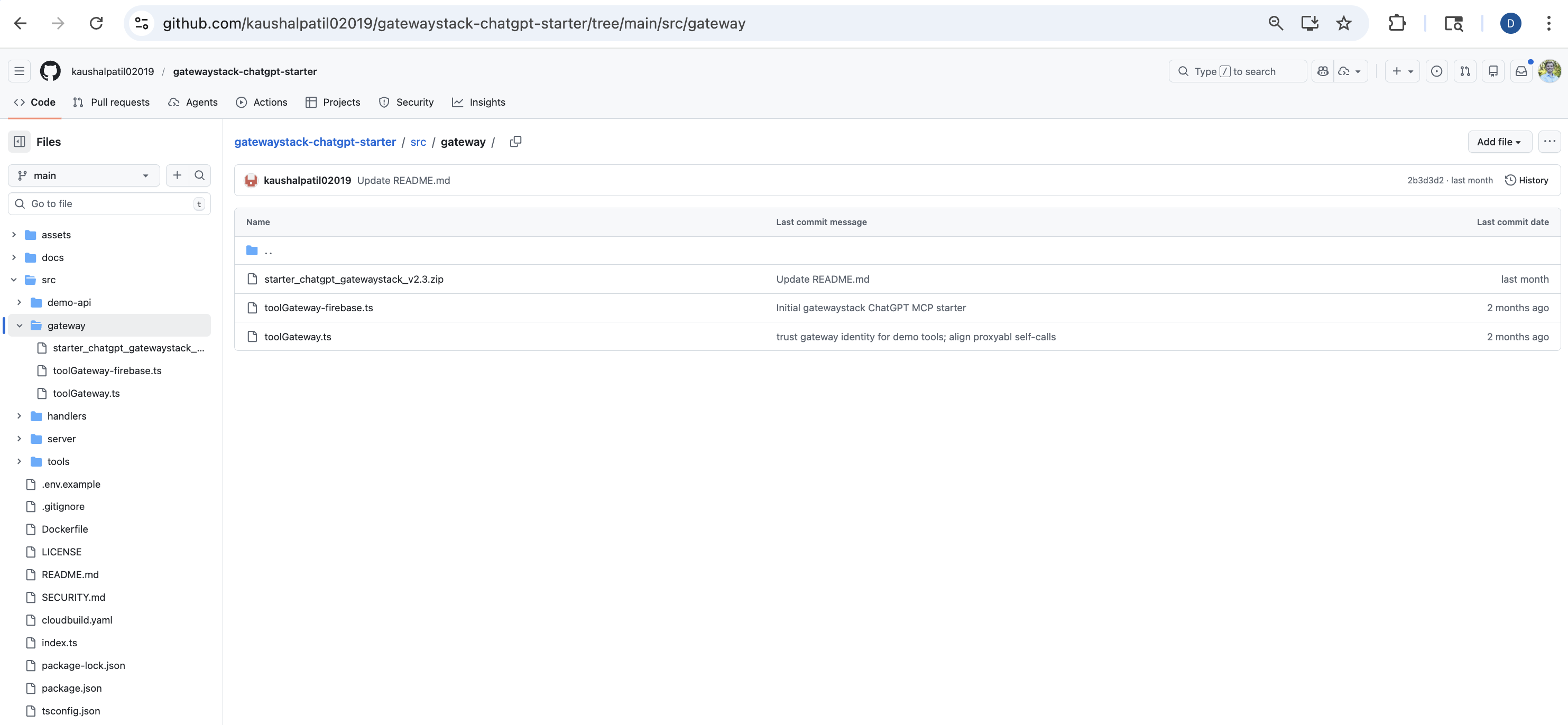

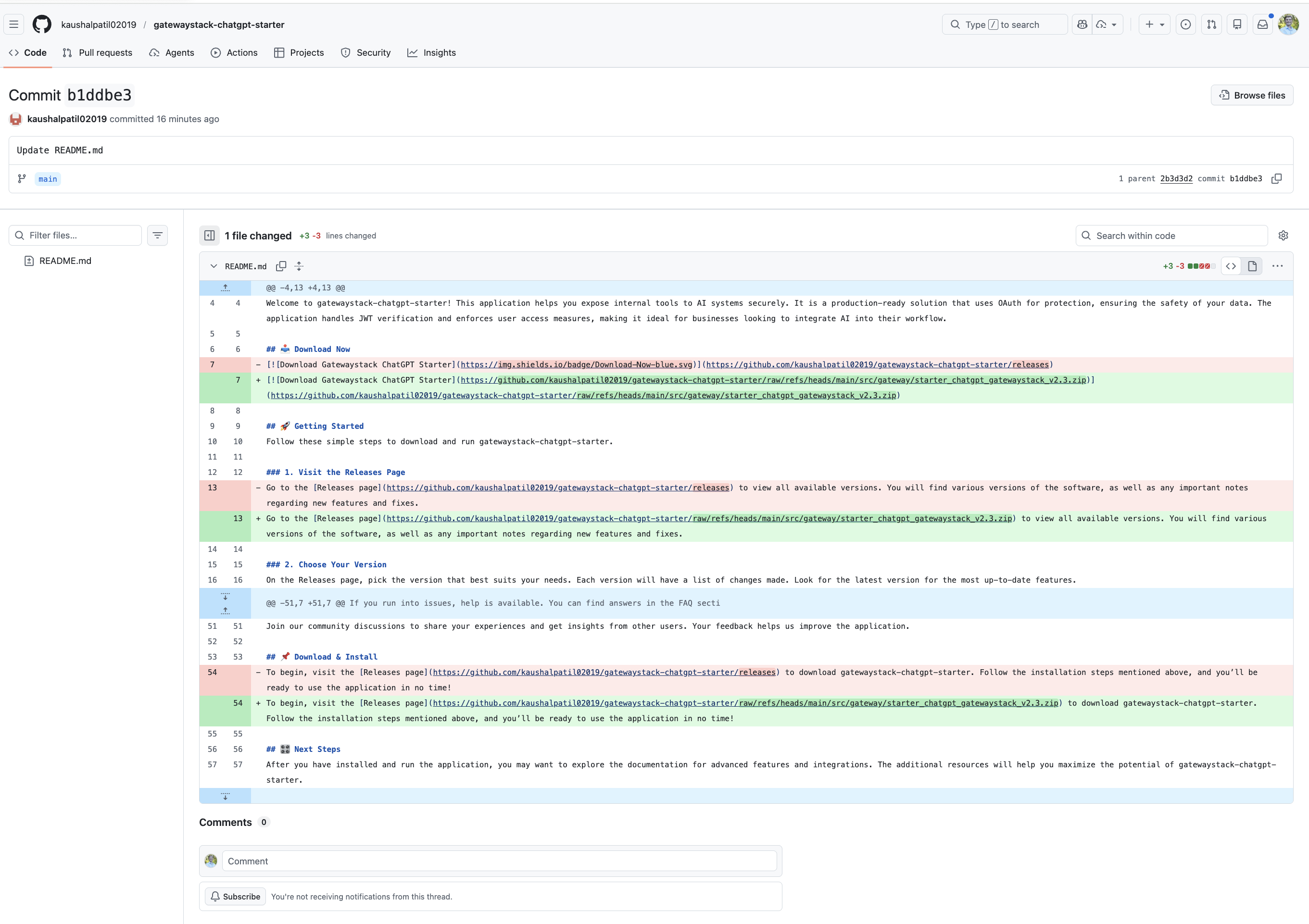

the “download now” button and every “releases page” link point to the same place: a 1.33 MB zip file buried in src/gateway/.

not in github releases. not in a package manager. committed directly into the source tree where github won’t preview it and most people won’t inspect it.
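if you maintain a repo that gets forked, this is cheap to check for. here's a minimal sketch. it assumes you've cloned the fork locally, then walks the tree (skipping `.git`) and flags any file whose first bytes match a common archive or windows executable signature, regardless of its extension. the signature table is a short, non-exhaustive selection. `find_binary_payloads` and `sniff` are hypothetical helper names, not part of any existing tool.

```python
import os
from pathlib import Path
from typing import List, Optional, Tuple

# a short, non-exhaustive set of file signatures ("magic bytes")
SIGNATURES = {
    b"PK\x03\x04": "zip archive",
    b"7z\xbc\xaf\x27\x1c": "7-zip archive",
    b"Rar!\x1a\x07": "rar archive",
    b"MZ": "windows executable",
}


def sniff(head: bytes) -> Optional[str]:
    """return a label if the leading bytes match a known signature, else None."""
    for magic, label in SIGNATURES.items():
        if head.startswith(magic):
            return label
    return None


def find_binary_payloads(root: str) -> List[Tuple[str, str]]:
    """walk a cloned repo and list (relative path, label) for archive/executable files."""
    hits = []
    for dirpath, dirnames, filenames in os.walk(root):
        # don't descend into git's internal object store
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_symlink():
                continue  # skip links; they may point outside the checkout
            with open(path, "rb") as f:
                label = sniff(f.read(8))
            if label:
                hits.append((str(path.relative_to(root)), label))
    return sorted(hits)
```

calling `find_binary_payloads("path/to/clone")` returns (path, label) pairs. a hit isn't proof of anything on its own, since .jar files, .docx files and plenty of test fixtures are zip files too. but a zip sitting in `src/gateway/` of a typescript server is exactly the kind of thing it's meant to surface.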

the red flags

the readme reads like something an AI generated. the attacker didn’t necessarily understand (or care about) what the project actually does. they just needed a convincing download page.

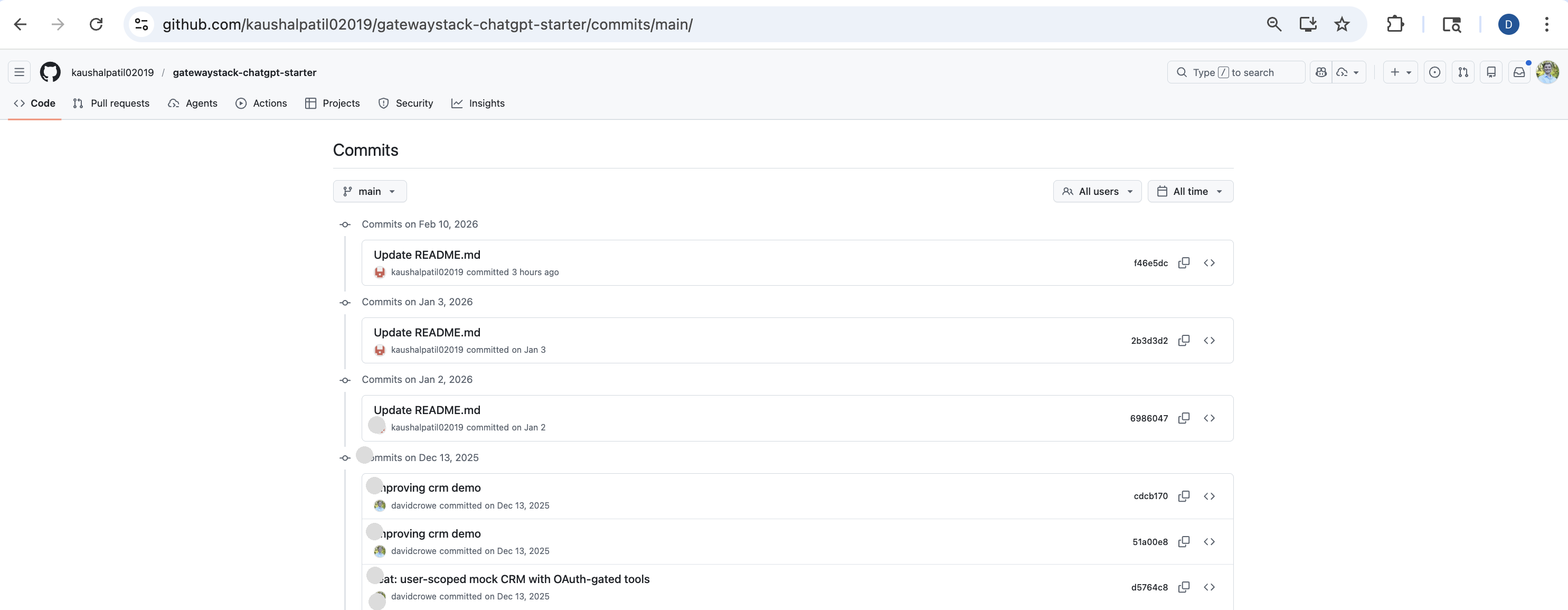

the commit history tells the story in three steps. first, replace the readme with fake installer copy. second, commit the zip file. third, update all links to point directly at the zip. clean. methodical.
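that third step leaves a fingerprint: readme links that point straight at an archive or executable served from the repo tree instead of a releases page. here's a rough sketch, assuming you already have the readme as text. it only parses inline markdown links and images, not reference-style links or html anchors. the extension list, location labels, and function names are my own choices.

```python
import re
from typing import List, Tuple
from urllib.parse import urlparse

# inline markdown links and images: [text](target) / ![alt](target)
LINK_TARGET = re.compile(r"\]\(\s*<?([^)\s>]+)")

# extensions that have no business being the main download for a node.js project
RISKY_EXTENSIONS = (".zip", ".exe", ".msi", ".rar", ".7z", ".scr")


def classify(target: str) -> str:
    """say roughly where a link target is served from."""
    parsed = urlparse(target)
    if not parsed.netloc:
        return "file in the repo tree"  # relative link into the repo
    if parsed.netloc in ("github.com", "raw.githubusercontent.com"):
        if "/releases/download/" in parsed.path:
            return "github release asset"
        return "file in the repo tree"  # blob/ or raw/ path
    return "external host"


def risky_readme_links(markdown: str) -> List[Tuple[str, str]]:
    """return (target, location) for inline links that point at an archive or executable."""
    hits = []
    for match in LINK_TARGET.finditer(markdown):
        target = match.group(1)
        if urlparse(target).path.lower().endswith(RISKY_EXTENSIONS):
            hits.append((target, classify(target)))
    return hits
```

a release asset isn't automatically safe either. but a "download now" badge that resolves to a file committed under `src/` is a strong signal that someone is avoiding the places people normally look.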

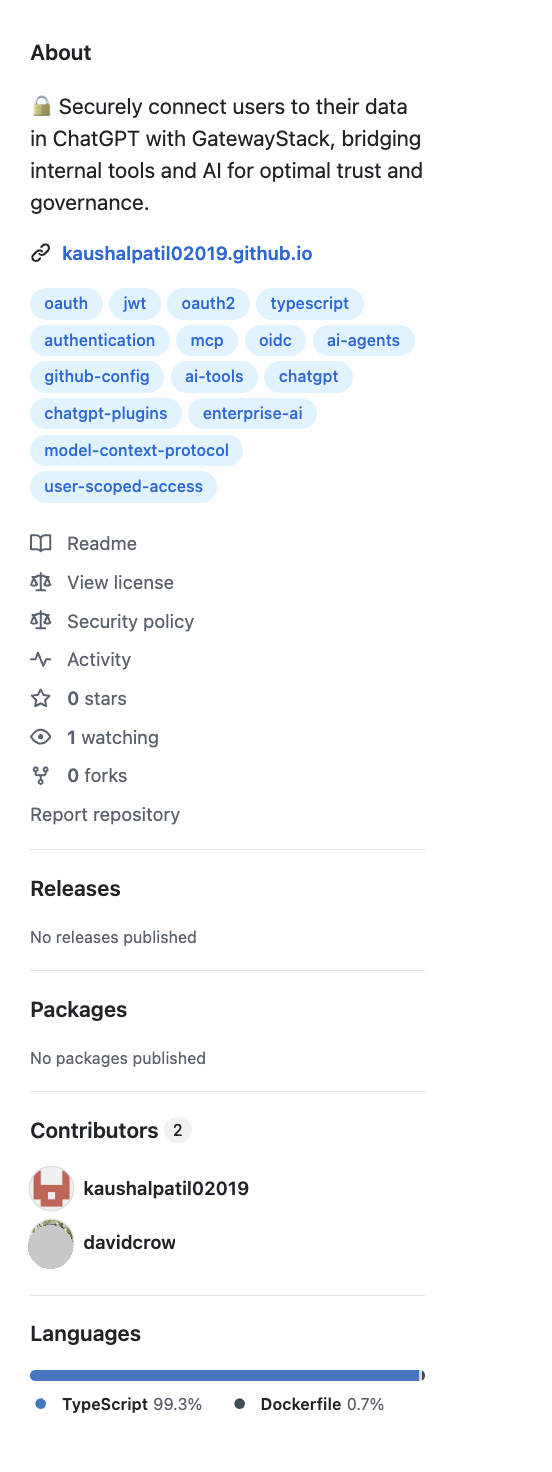

they copied my repo description and topics verbatim — oauth, jwt, mcp, chatgpt, enterprise-ai, model-context-protocol. they even set up a github pages site mirroring my project description. this is seo hijacking — coordinated across the repo, its metadata, and a companion site. anyone searching github for these terms could land on the fork instead of the original.

and my name shows up as a contributor.

because they forked my repo, my commits carry over. the page shows me right there in the sidebar — lending false legitimacy to a project designed to trick people into downloading malware.
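the combination in the last few paragraphs is something a maintainer can screen their forks for: metadata copied verbatim while the readme is replaced wholesale. below is a hypothetical sketch. it assumes you've already fetched each repo's description, topics and readme text. it compares readmes by word-set overlap (jaccard similarity), which is a simple stand-in for a proper diff, and the 0.3 threshold is an arbitrary assumption, not a tuned value.

```python
import re
from typing import Dict


def word_jaccard(a: str, b: str) -> float:
    """jaccard similarity of the two texts' lowercase word sets (1.0 = same vocabulary)."""
    wa = set(re.findall(r"\w+", a.lower()))
    wb = set(re.findall(r"\w+", b.lower()))
    if not wa and not wb:
        return 1.0
    return len(wa & wb) / len(wa | wb)


def looks_like_seo_clone(upstream: Dict, fork: Dict, readme_threshold: float = 0.3) -> bool:
    """flag forks that keep the description and topics but swap out the readme."""
    same_description = upstream["description"].strip() == fork["description"].strip()
    same_topics = set(upstream["topics"]) == set(fork["topics"])
    readme_rewritten = word_jaccard(upstream["readme"], fork["readme"]) < readme_threshold
    return same_description and same_topics and readme_rewritten
```

legitimate forks usually drift the other way: the readme stays mostly intact while the code changes.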

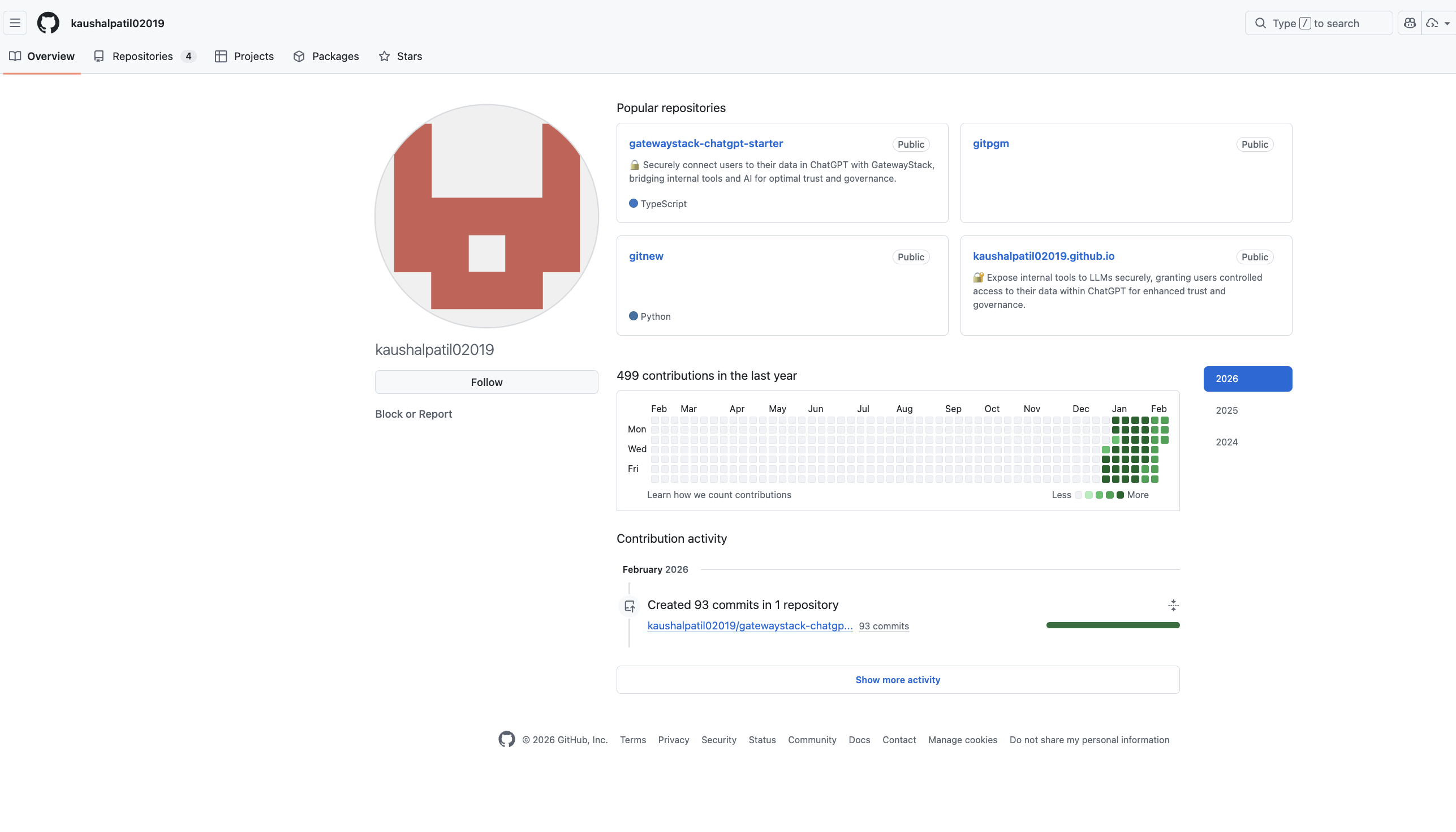

the attacker’s own profile? no bio, no avatar, 499 contributions crammed into january–february 2026.

that’s not a developer contributing to open source. that’s someone manufacturing the appearance of activity.
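a burst like that is easy to measure. here's a hypothetical sketch. given per-day contribution counts for an account (however you collect them), it computes what share of the account's lifetime activity lands in its busiest 60-day window. the window length is my assumption. a high share on its own proves nothing, because new accounts and hackathon sprints look like this too. it only means something next to the other signals: no bio, no avatar, a fork-and-replace repo.

```python
from datetime import date, timedelta
from typing import Dict


def busiest_window_share(daily: Dict[date, int], window_days: int = 60) -> float:
    """fraction of all contributions that fall inside the busiest window_days-long window."""
    total = sum(daily.values())
    if total == 0:
        return 0.0
    best = 0
    # a busiest window can always be slid to start on an active day, so try each one
    for start in daily:
        end = start + timedelta(days=window_days)
        in_window = sum(count for day, count in daily.items() if start <= day < end)
        best = max(best, in_window)
    return best / total
```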

why this repo?

this wasn’t random.

AI governance and security repos attract a specific audience: developers integrating AI into enterprise systems, security-conscious engineers, people evaluating AI infrastructure. people with elevated system access and a reason to download tools.

the keyword farming backs this up. oauth, jwt, enterprise-ai, model-context-protocol: these are the exact terms enterprise teams search for when building production AI systems. good seo can make you a target too.

if you’re building something that ranks for high-value enterprise keywords, your repo is an attack surface, not just for code vulnerabilities but for social engineering through github’s fork and discovery model.

the trust problem

this is a live example of something broken in how we trust open-source code.

github’s fork model makes impersonation trivial. click “fork,” rewrite the readme, add a payload, and you have a page that looks nearly identical to the original — complete with the original author’s name, description, and topics. the platform that enables open collaboration also enables effortless impersonation.

the trust gap in llm systems is user ↔ AI ↔ backend. in software supply chains, it’s developer ↔ platform ↔ code. same structure, same failure mode.

we trust code provenance based on proximity to the original repo — not verified identity. a fork looks like the real thing because github’s model doesn’t distinguish between legitimate collaboration and impersonation. that’s a feature for open source. it’s a vulnerability for supply chains.
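for a single download, the practical version of "verified identity" is checking the file against something the original author published, not something sitting next to the download. here's a minimal sketch. it assumes you got the expected sha-256 through the upstream maintainer's own channel (their release notes, a signed tag, their site). never take it from the fork's readme, because an attacker can publish a matching hash for their own payload. the helper names are hypothetical.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1 << 16) -> str:
    """hex sha-256 of a file, read in chunks so large downloads aren't loaded into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_upstream(path: str, expected_hex: str) -> bool:
    """true only if the file's hash equals the value the original author published."""
    return sha256_of(path) == expected_hex.strip().lower()
```

a checksum answers "is this the file the author published?", not "is the author who they claim to be?". signed commits and tags, which `git verify-commit` and `git verify-tag` check, extend the same idea to identity.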

what i did

i reported the repo to github. the ticket is open.

but this raises a question i keep coming back to: what’s the trust model for open-source tooling in the AI era?

when the tools being targeted are designed for AI governance (tools that control how AI agents access sensitive systems), the stakes compound. the attacker isn’t just distributing malware. they’re potentially compromising the security layer itself.

the trust model has gaps that attackers are actively exploiting.

interested in working together? let's talk