prioritizing genai initiatives

originally posted to linkedin

if your team only uses roi to prioritize genAI initiatives, you’re probably getting suboptimal results.

how can that be?

roi doesn’t factor in your appetite for risk.

let’s borrow some useful concepts from finance:

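- two projects can show the same roi on paper but carry very different levels of risk

- the efficient frontier: the set of portfolios that deliver the highest expected return for each level of risk

- diversifying your portfolio (combining investments whose outcomes aren’t perfectly correlated) reduces risk, so you can earn more expected return for a given risk level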
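to make the first point concrete, here is a small python sketch with made-up numbers: two hypothetical projects, each with four equally likely roi outcomes. both have the same expected roi (0.2), but the spread of outcomes, measured here as variance (one common proxy for risk), is very different: roughly 0.005 vs 0.9. the project names echo the example later in this post; the outcome values are purely illustrative assumptions.

```python
from statistics import mean, pvariance

# hypothetical roi outcomes under four equally likely scenarios
# (made-up numbers for illustration only; -1.0 means the investment is fully lost)
projects = {
    "internal doc search": [0.1, 0.2, 0.3, 0.2],
    "customer-facing chatbot": [-1.0, -0.4, 0.8, 1.4],
}

def summarize(outcomes):
    """return (expected roi, variance of roi) for equally likely outcomes."""
    return mean(outcomes), pvariance(outcomes)

for name, outcomes in projects.items():
    expected, variance = summarize(outcomes)
    print(f"{name}: expected roi = {expected:.2f}, variance = {variance:.3f}")
```

an roi-only ranking would treat these two as a tie.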

this type of portfolio thinking introduces a million-dollar question:

how much risk will your org accept for genAI initiatives? after all, the greater the risk you accept, the greater the potential reward.

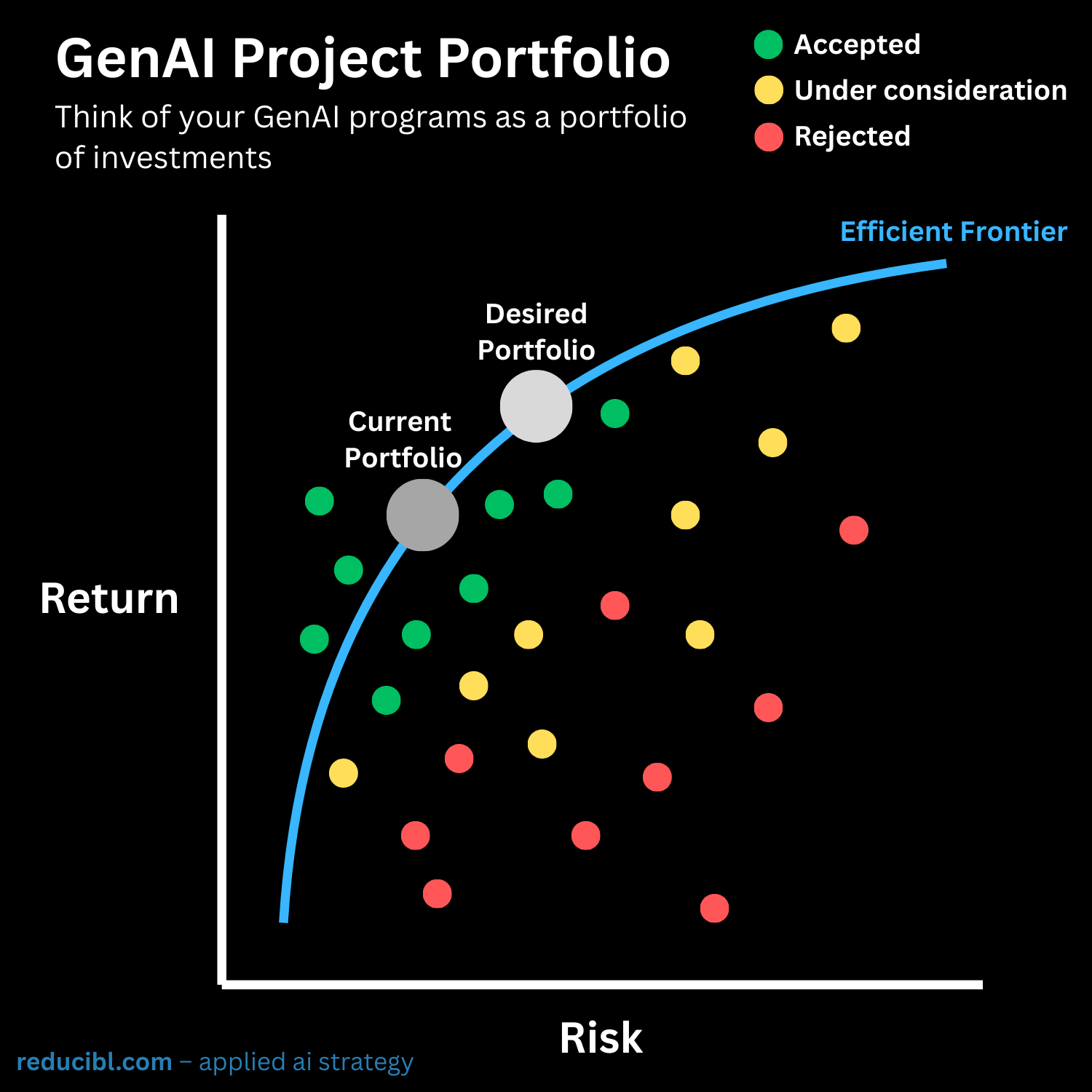

the point on the efficient frontier that matters most isn’t a project. it is the portfolio of projects:

- where your portfolio lands on the efficient frontier depends on your org’s risk appetite

- how you prioritize genAI projects depends on where you want to land on the efficient frontier

for example: a customer-facing AI chatbot might have high reward but high risk (brand damage if it hallucinates), while an internal doc search tool might have lower reward but also lower risk. both might show similar roi, but they fit different portfolio strategies. they might even be complementary in the right portfolio.

the exact mechanics matter less than the mental model: prioritization decisions should be made with visibility into how each initiative shifts the risk/reward profile of the overall portfolio.

what is risk, anyway?

at the project level, risk is the variance of the project’s possible outcomes. at the portfolio level, you also need to account for covariance: how the failure modes of individual projects are correlated.
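for readers who want the formula behind this, the standard mean-variance portfolio variance is below. $w_i$ is the share of the portfolio allocated to project $i$, $\sigma_i^2$ is the variance of its outcome $R_i$, and $\rho_{ij}$ is the correlation between the outcomes of projects $i$ and $j$. treating genAI budget shares as the weights is an assumption made for illustration.

$$
\sigma_p^2 = \sum_{i} w_i^2 \sigma_i^2 + \sum_{i \neq j} w_i w_j \operatorname{Cov}(R_i, R_j),
\qquad \operatorname{Cov}(R_i, R_j) = \rho_{ij}\,\sigma_i \sigma_j
$$

the second sum is the portfolio-level piece: positively correlated outcomes add risk, and uncorrelated outcomes contribute nothing to it.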

if two projects share a dependency on the same data or are both exposed to the same regulation, they have some shared risk that should be considered. the last thing you want is all of your genAI initiatives failing at once.
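here is the same idea as a rough python sketch for two projects with equal budget share and equal risk (standard deviation 0.5). the correlation values and the labels attached to them are assumptions for illustration, not measurements: the more the projects’ failure modes overlap, the less diversification helps.

```python
import math

def portfolio_risk(w1, sd1, w2, sd2, rho):
    """standard deviation of a two-project portfolio.

    w1, w2: budget shares; sd1, sd2: per-project standard deviations;
    rho: correlation between the two projects' outcomes (-1 to 1).
    """
    variance = (w1 * sd1) ** 2 + (w2 * sd2) ** 2 + 2 * w1 * w2 * rho * sd1 * sd2
    return math.sqrt(variance)

# hypothetical scenarios: how much the two projects' failure modes overlap
scenarios = [
    (1.0, "same data and same regulation"),
    (0.5, "partly shared dependencies"),
    (0.0, "independent"),
]

for rho, label in scenarios:
    risk = portfolio_risk(0.5, 0.5, 0.5, 0.5, rho)
    print(f"rho = {rho}: portfolio risk = {risk:.3f} ({label})")
```

with fully correlated outcomes, the portfolio is exactly as risky as each project on its own (0.5). with independent outcomes, risk drops to about 0.35 without changing expected return.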

and that’s why prioritization decisions should be made as part of a genAI portfolio strategy, not in isolation based solely on project-level roi.

how does this work in practice?

to be clear, i’m not proposing that you literally calculate covariance for your genAI projects. rather, use the efficient frontier and portfolio theory as mental models.

in practice, you might set things up to estimate risk and reward from your genAI project intake form. plot risk vs. reward for accepted, under-consideration, and rejected projects. consider where your overall portfolio sits on the efficient frontier, and where you want it to be.
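one possible way to operationalize this, sketched in python: score each intake-form entry for reward and risk (the 1-5 scales, field names, and example projects here are all hypothetical), then compute a budget-weighted position for the current portfolio and for the portfolio you’d have if pending projects were approved. the averaging deliberately ignores covariance, in keeping with using portfolio theory as a mental model, so shared dependencies still need a qualitative discussion.

```python
from dataclasses import dataclass

@dataclass
class IntakeEntry:
    """hypothetical intake-form fields; scores are subjective 1-5 ratings."""
    name: str
    status: str    # "accepted", "under consideration", or "rejected"
    reward: int    # 1 (low) to 5 (high)
    risk: int      # 1 (low) to 5 (high)
    budget: float  # requested share of the genAI budget

def portfolio_position(entries, statuses=("accepted",)):
    """budget-weighted (risk, reward) for entries with the given statuses.

    ignores correlation between projects, so shared dependencies
    still need to be discussed separately. returns None if nothing matches.
    """
    selected = [e for e in entries if e.status in statuses]
    total = sum(e.budget for e in selected)
    if total == 0:
        return None
    risk = sum(e.risk * e.budget for e in selected) / total
    reward = sum(e.reward * e.budget for e in selected) / total
    return risk, reward

# hypothetical intake data for illustration
intake = [
    IntakeEntry("customer-facing chatbot", "under consideration", reward=5, risk=5, budget=0.4),
    IntakeEntry("internal doc search", "accepted", reward=3, risk=1, budget=0.2),
    IntakeEntry("contract summarizer", "accepted", reward=4, risk=3, budget=0.2),
    IntakeEntry("marketing image generator", "rejected", reward=2, risk=4, budget=0.2),
]

print("current portfolio (risk, reward):", portfolio_position(intake))
print("if pending projects are approved:",
      portfolio_position(intake, ("accepted", "under consideration")))
```

for this made-up data, approving the chatbot moves the portfolio from roughly (2.0, 3.5) to (3.5, 4.25): higher reward, but also higher risk. whether that move is welcome is exactly the risk-appetite question above.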

have a leadership discussion about proposed projects at the individual level, then discuss them at the portfolio level. make sure there is a “single-threaded owner” with full ownership of the final prioritization decision for the portfolio.

optimize roi? yes. and be sure to consider your risk appetite too.

does your team prioritize genAI initiatives at the project or portfolio level?

are your risk appetite and portfolio risk explicitly considered in prioritization decisions?

david crowe - reducibl.com - gatewaystack.com